We often hear about the revolutionary impact of artificial intelligence (AI), as governments, corporations, and many other organizations rush to apply it in recruitment, policing, criminal justice, healthcare, product development, and marketing. Generative tools such as ChatGPT have also become part of our everyday digital lives. Yet Ruhi Khan, an Economic and Social Research Council (ESRC) researcher at the London School of Economics (LSE), highlights a critical problem: for all of AI's progress, the gender and racial biases embedded in it pose a serious risk to women. Khan is championing a worldwide feminist movement to confront these biases through awareness, inclusion, and regulation.

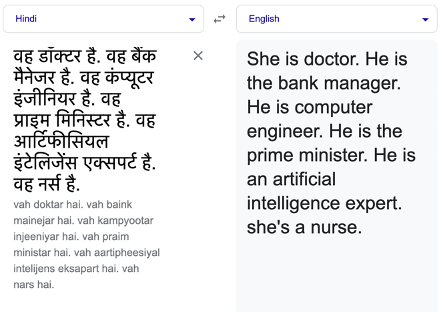

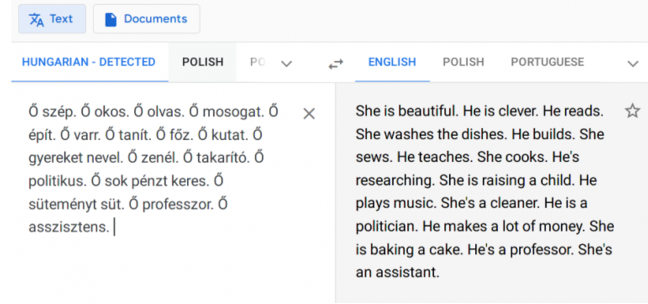

But is AI really gender-biased? Khan tested this with a simple experiment on Google Translate: she entered sentences containing gender-neutral pronouns and examined which gendered pronouns appeared in the translations.

She found clear biases in translations from Hindi to English. This is not an isolated case: similar biases appear in translations from other languages, such as Hungarian, into English, which points to a pervasive problem.

The likely source is Natural Language Processing (NLP), the field behind tools like machine translation. NLP models learn from large bodies of training data, and when that data reflects societal stereotypes, the models absorb them. The data can implicitly link gender to particular professions, roles, or behaviors, and the system then reproduces these associations, further entrenching the stereotypes.
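To make this mechanism concrete, here is a deliberately simplified toy sketch. It is not how Google Translate or any real NLP system works; the tiny corpus and the functions `pronoun_counts` and `translate_neutral` are hypothetical. The "translator" fills in a gender-neutral pronoun with whichever pronoun appeared most often alongside that profession in its training sentences, so a skew in the data becomes a skew in the output.

```python
from collections import Counter, defaultdict

# Hypothetical toy corpus standing in for skewed training data.
corpus = [
    "he is a doctor",
    "he is a doctor",
    "she is a doctor",
    "she is a nurse",
    "she is a nurse",
    "he is an engineer",
]


def pronoun_counts(sentences):
    """Count how often each pronoun (first word) appears with each profession (last word)."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        pronoun, profession = words[0], words[-1]
        counts[profession][pronoun] += 1
    return counts


def translate_neutral(profession, counts):
    """Resolve a gender-neutral pronoun by picking the pronoun seen most often
    with this profession: a naive rule that simply copies the data's skew."""
    return counts[profession].most_common(1)[0][0]


counts = pronoun_counts(corpus)
for job in ("doctor", "nurse", "engineer"):
    print(f"{job}: {translate_neutral(job, counts)}")
```

Nothing in the code mentions gender roles explicitly; "doctor" maps to "he" and "nurse" to "she" only because of how often each pairing occurs in the (hypothetical) training sentences.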

Khan shows how AI's gender bias surfaces across different domains.

In recruitment, AI algorithms can unintentionally perpetuate historical gender biases and sideline female candidates. Amazon, for example, found that its experimental recruitment software favored male candidates: it had been trained on a decade's worth of résumés submitted mostly by men, and so it learned to prefer men over women.

In healthcare, AI diagnostic systems may respond poorly to female patients' symptoms, because medical research and testing have historically focused on male subjects. This can produce unequal health advice: for similar symptoms, men might be promptly directed to emergency services, while women are advised to manage stress or to seek counseling for depression later.

In automotive safety, equipment such as seatbelts and airbags has historically been designed around male data, overlooking physiological differences in women's bodies and potentially increasing their risk of injury in traffic accidents.

Acknowledging these issues is the first step toward change.

These problems are not random. They are rooted in the data sets used to develop AI and machine learning systems, which often lack diversity and mirror historical gender biases and stereotypes. Khan advocates a new movement that encourages women from diverse backgrounds around the world to take an active part in training and shaping AI, moving from silent observers to active participants. Women should not merely consume technology; they should help build it. Through education, engagement, and advocacy, we can steer AI toward fairness and inclusion.

Photo sources: unsplash.com, Media@LSE, Shutterstock, The Minderoo Centre for Technology and Democracy